Building a CPU to compete with Intel?

The discussion then moved back to CPUs, since Kirk believes that AMD's days are numbered. The question is: will Nvidia build a CPU to balance the market out if AMD's future is as gloomy as Kirk makes out? After all, Nvidia's APX2500 is a system on a chip with an ARM-based CPU core on die, so it's not as if the company can't build a CPU... it's just a matter of whether it wants to, right?

“Building a CPU is not that hard,” claimed Kirk.

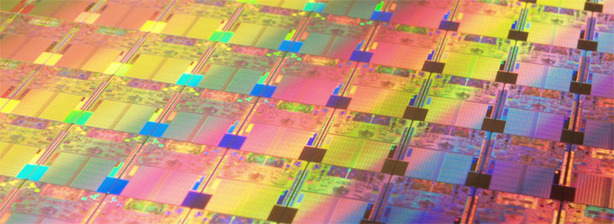

“What Intel does well is not just building good CPUs, it’s the fact that they’re a vertically integrated manufacturing company – Intel’s in the business of turning sand into money.

"In the middle of that there is the semi-conductor process and the design of the CPUs, but really if you took away the process advantage that Intel had, they would be behind AMD pretty consistently in terms of architecture. They win because they build an efficient and faster process. Who was the last CEO of Intel?” he asked.

Nvidia's APX2500 development handset.

“That’s right, he’s the process guy,” David said. “So for the last ten or twelve years, that’s when they’ve really been excelling. Architecturally though, they’ve been behind AMD for some time – the Athlon architecture is a better architecture because the integrated bus controller is a better approach.

“Intel is finally going to catch up with Nehalem.”

I thought his assessment of the Athlon as the better architecture was a little off, given that the Core 2 Duo is so much faster than AMD's current processors on a clock-for-clock basis. There's no denying, though, that the Athlon is the more elegant design.

“Anyway, back to your question about us building a CPU,” said David in an attempt to bring the discussion back onto the topic. “I think that if we were to compete with Intel, we would need to become a vertically integrated company and have our own fabs.”

Intel's process technology advantage

So, with this in mind, if Kirk has underestimated Intel and it builds a very competitive GPU, will Intel’s process technology advantage be a problem for Nvidia? “Well, definitely maybe,” he said. “The reason I would say maybe is: are they willing to take their most advanced process technology capacity and use it on GPUs? GPUs are much bigger than CPUs, so in the same capacity that they could build 100 million CPUs, they could build 20 million GPUs.

“So taking into account how much money you can make on a Core 2 Duo, can you make five times more from a GPU?” asked Kirk. No, of course not, I said. But having said that, surely Larrabee is an indication that Intel feels that massive threading is the future.

“I think massive threading is the future, but that doesn’t mean they’re going to build a competitive GPU,” he responded.

“Think about where Larrabee is going to sell – is that going to be a high-end product?” Of course, it’s difficult to say without actually knowing what Larrabee is at this point in time. “OK, well think about it this way: I’m guessing that it’ll probably cost them about one thousand dollars a chip to build Larrabee.

Kirk believes that Intel's process technology advantage might play a role in their impending war, but he's sceptical of whether Intel would commit to making such large chips on its latest silicon technology.

“What I think about Larrabee is that Intel is struggling with falling ASPs in their market because many people are starting to realise that the CPU is adequate for most things they want to do. Intel looks around and they say ‘OK, there’s some billion dollars over there, those ought to be mine!’” said David, laughing. “There’s no logic to it other than that ‘it’s my money over there and I want it’.

“They’re the world’s leading designers and manufacturers of CPUs – how hard could it be to build a GPU? I mean, come on, how hard could it be? That crummy little company down the road builds them – we could build them in our sleep. Come on, how hard could it be?” asked David, rhetorically. Of course, he knew that I couldn’t answer that question because I’m not a GPU designer.

“Well, neither are they,” he quipped. “And that’s why they can’t answer it either.”

We'd like to thank David for taking time out of his busy schedule for what was a very interesting discussion about the future of the industry from Nvidia's perspective.
